The Fractured Web We’ve Inherited, AI and Questions About The Future

August 5, 2025

All imagery in this post I generated using AI tools, some of it over 2 years ago when those tools first became publicly available. The exceptions are the screenshots from social media, which are not my creations, and the graph near the bottom.

Content overload.

First, a few things out of the way. This is not a technical deep dive into AI. There are plenty of articles to read that explain it better than I would. This MIT landing page is a great place to understand a lot of the technical terms and subsystems if you are interested. I mention a lot of the tools and high-level concepts, but we don’t get into how tokens work in LLMs, or into LoRAs, RLHF, distillation, MoE or transformer architecture. I may write another post and talk about a lot of that and how I experiment with tools like Stable Diffusion and FLUX and integrate them with digital painting in Photoshop.

Instead, this post starts with a high-level overview of the current state of the public internet and how I think it’s gone wrong. We look at the evolution of social media and where things appear to be quickly heading, with a multitude of AI tools being refined and new ones released at breakneck speed. We look at the possibilities as they are in mid 2025 and where things could be by the end of the decade. We talk about agentic technologies and hypothesize about AGI. If you are coming to this from a background of “What is this AI stuff the kids are talking about”, the only thing you need to start with is what an LLM is.

An LLM, or large language model, is a type of AI built using transformer architecture and trained on trillions of tokens of text and computer code. Put simply, it’s the core technology behind tools like ChatGPT, allowing them to understand human language, answer questions and generate human-like responses. You should also know that you can run these models locally on your computer if it’s fast enough, without an internet connection, and set them up to be privacy focused. You won’t have to hand over your data or subscribe to a service. If you are interested in this, check out Ollama. If you are still confused, don’t worry, this post is more philosophical than technical.

If you know a lot about AI and have used these tools, I think this is still worth a read, and I’d be curious to hear your thoughts about what the internet has become and how you feel about where things are headed. I have a lot of questions, some of them a bit deep, and not a lot of answers. Further down, I get into current and emerging AI systems that could change the world more than anything before them. Some believe we are living in the AI version of right before the dot-com bubble and think everything AI is just hype, but I couldn’t disagree more, for so many reasons.

One last housekeeping bit: I curated a lot of links that, if you have the interest and time, are worth reading along the way or saving to digest later. Some are news articles, some are technical papers, and some are YouTube videos. Those links appear as blue text in this post. And finally, at the bottom of this post is a list of links to great interviews on YouTube as well as some books that I think offer some hope.

Also, if this is too much to digest in one sitting, here are the section titles in order. Each one is a large, easy-to-find header, so if you want to come back to something, it should be easy to locate.

Our Fractured Web

AI Slop

The Generative Dark Forest

The Coming AI Wave

The Collapse of Trust

Agentic AI

Where Do We Go From Here?

Regulation

AGI

ASI

Wrap Up

Can we even see what is happening?

Our Fractured Web

I wrote a lot of this last year but never finished it, partly because the continuous pace of change made what I had written feel outdated before I hit “post.” The truth is, it’s now more relevant than it was before. I want to talk about AI, but also what social media has become. What human connection has become. What has happened to trust. If you can bear with me, let’s first discuss the current state of social media and what it once was.

Social media was supposed to bring us together. A digital campfire, if you will. And for a while, it did. I used it to stay close to many of you, friends and family and people I met while traveling abroad whose stories I wanted to share and follow. I used it to share my artwork and photography and successes and thoughts and ideas with people who cared.

But over time, that intimacy became buried under policy changes and culture wars. More and more content was designed and promoted to provoke rather than connect. I saw people spiral into outrage or indifference or worse. I watched compassion dissolve into contempt. Slowly, it began to feel like everyone was arguing instead of bantering, joking or telling stories. The echo chambers we put ourselves in became so loud they drowned out dissent, yet so familiar they often felt like truth. Then the algorithm changed again, and I saw less and less of the people I actually hoped to see and more autoplay videos and “suggested content”.

Lately I’ve noticed more and more that this newest wave of content looks and sounds human, but often isn’t. Fake news was just the start. That’s old news. Now we face fake people, fake places, fake memories, fake art, fake photos, fake products, fake reviews, fake authority, fake emotion, fake videos (check out Sora and Veo 3 on YouTube), the list goes on and on. And the tragedy is, most people don’t seem to care.

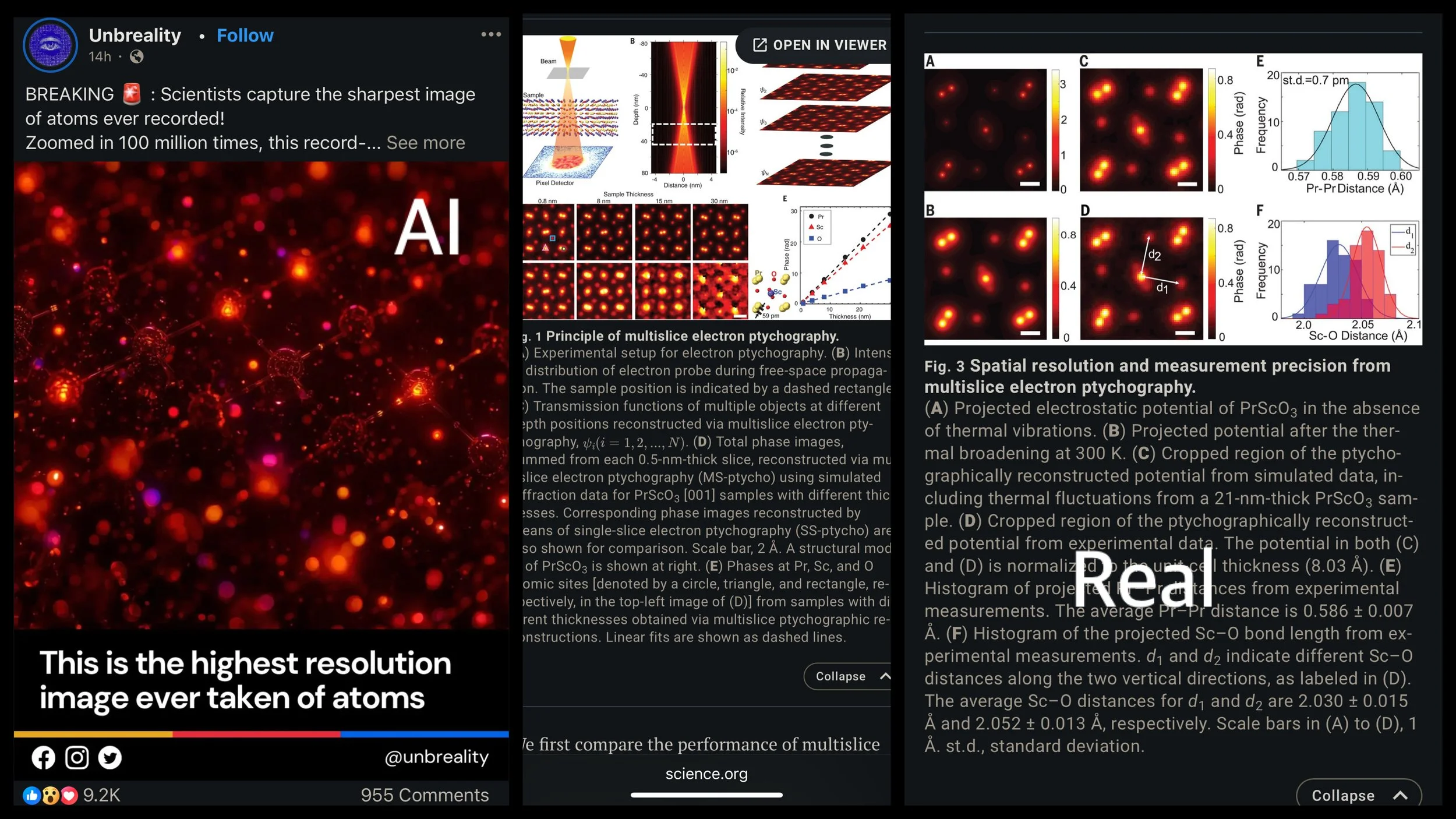

The left is an AI image with an article that seemed to be written by an LLM, while the middle and right screen grabs are from the science.org paper.

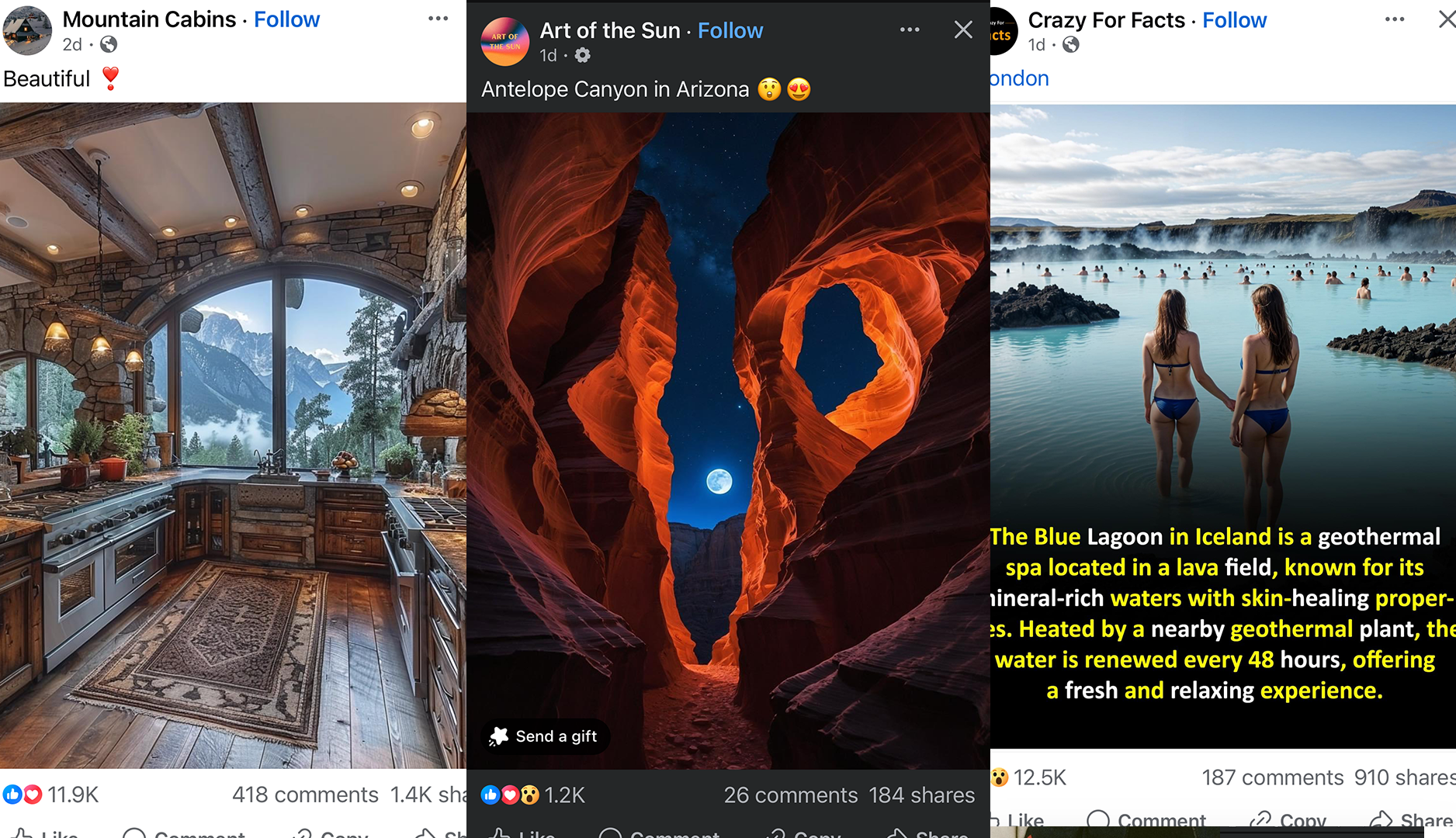

The average user now scrolls past deepfaked smiles, AI-scripted motivational posts, AI-generated fashion models, generated dream homes and fantasy-inspired cabins. They interact, often unknowingly, with chatbots posing and commenting as people. It’s now common to stumble across generated articles when trying to find something in a Google search or even in a social media feed (above). It’s not even alarming anymore. It’s normal. So many people I follow on social media regularly share, and by default promote, this content. I fear this new feed is truly what our culture as a whole has deemed “interesting” and in some cases prefers.

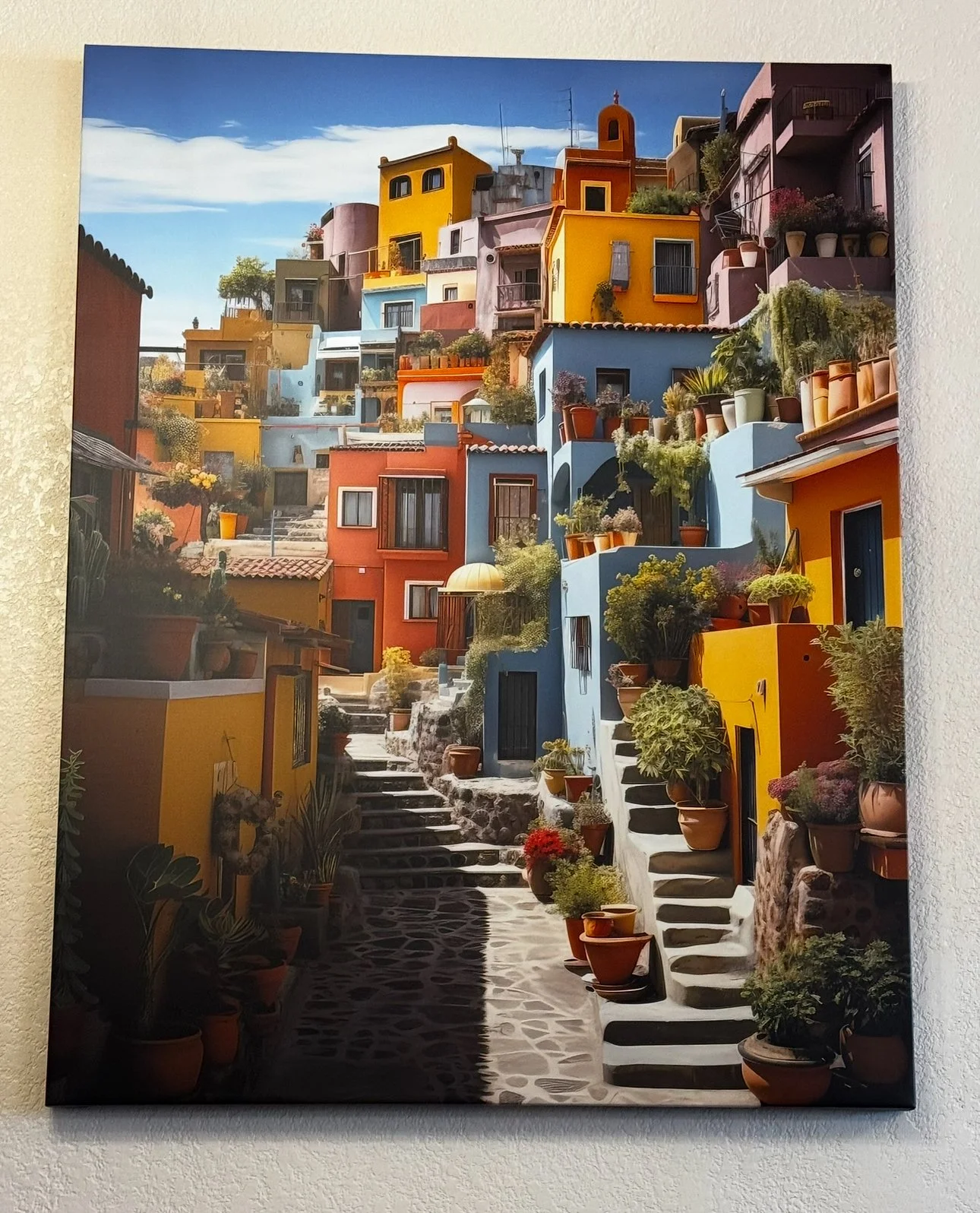

I was having lunch with my mom the other day in a local restaurant and there was a 4-foot-tall canvas wrap of a beautiful villa alleyway, similar to something you’d see in Cinque Terre, Italy. Except it wasn’t real. It was a pretty terrible AI rendering of such a place, but it was convincing…enough. Maybe an unedited output from Midjourney circa late 2023 in terms of quality. If you look closely at the plants, or the stones on the street, or the tiles or plant pots or steps, you’ll see what I mean. I would bet the owner bought it online and didn’t even realize this was an output from a system like Midjourney. I only noticed it because we happened to sit directly below it (see below). There were 5 other huge fake canvas prints on the wall. What is even the point of photographers or other visual artists sharing their work or trying to sell it if this is what society chooses over something real?

A local restaurant’s new wall art here in Flagstaff. Look at those leaves and steps, and the clay pots and windows.

AI Slop

Everywhere you look, whether it’s Instagram, TikTok, Facebook, YouTube, Reddit, or even once-trustworthy blogs and some news sites, this avalanche is visible. It’s not just social media sites pushing AI content either. It’s Google’s new AI mode that wants to be your default instead of traditional search. It’s Microsoft Copilot woven into your Windows PC. Apple has its own service that is currently behind these others, but it won’t be long until it catches up. Rumor is they may even fully integrate OpenAI’s ChatGPT.

Side note: As the content on the web becomes more and more “fake/generated”, it becomes part of the data set that future tools will be trained on. One danger is that future AI tools may regurgitate this as fact (in terms of language output) and the average user won’t even realize it. Here’s a great write-up about this. Researchers often call the degradation that comes from training on generated data “model collapse”. It is related to, but not the same as, hallucinating, which is when a model confidently makes things up. More on that below before the next section.

What concerns me isn’t just the technology, it’s how indifferent we’ve become to it. And this is coming from someone who lives and breathes tech (my day job is in IT). Every time I get on a platform I scroll past videos or photos of cute AI-generated babies wrapped in cabbage, digital veterans in wheelchairs holding birthday signs that ask for likes and shares, and deepfake influencers offering emotional or, worse, medical advice. Some of these go viral. Some make money. And so much of this… isn’t real.

It’s obvious if you take a step back, but we have to remind ourselves it wasn’t always like this. There has been a quiet transformation of the internet into something unrecognizable from what it was just 5–10 years ago. It has become shaped not by people, but by algorithms, automation, and a select few people pulling the levers. We’ve been living in a post-generative media era for 2–3 years now, depending on the metric, and the rate at which it has overtaken real, human-made art, content and communication is staggering.

You can call it AI slop. Or noise. But for many, especially the young or lonely or depressed, this *is* the feed. This *is* their reality. And it's shaping their worldview. I’d be surprised if you haven’t reshared some slop by this point. Or mistaken it for something real. I think most people have. We are in the middle of losing our ability to think critically and, even worse, our ability to notice that we are losing it. I think the last US election is certainly proof of that.

I want to add that there is a meaningful difference between AI-generated slop and AI-assisted art. At least in my opinion. Slop is the content designed for maximum engagement. The kind you see polluting any social media site these days. Images generated quickly with vague prompts like “beautiful landscape” meant to harvest likes and shares and comments. It is noise lacking substance or meaning. Art, on the other hand, makes you pause and reflect, or think, or question it. It doesn’t matter the medium. It reflects human emotion even if AI tools were part of the process. I think the difference lies in the intent. Real art has meaning while slop is just output.

Just a few examples of what I scrolled past within about an hour. ALL are AI generated.

If you are an adult over 25, think about how your thoughts, beliefs, fears, and interests would have been molded if you had grown up not playing outside or working through tough situations on your own, but instead steeped in made-up content advertised as reality during the most formative years of your life. Or if you didn’t have to problem solve and struggle to find the answer. If you could just ask a genie that gave you the answer, sometimes so convincingly that you would never think to question whether it was true. That terrifies me because I’ve spent the better part of my life chasing unique and real moments and capturing them to share with those who couldn’t see them for themselves. My entire life I’ve wanted to learn more about everything and be pushed in new directions to grow as a human. In retrospect, I’ve wanted to understand the world and those who inhabit it. I think that’s why I traveled for so long.

I’m not even against generative AI content as its own art form or entertainment or as a tool, but when it becomes people’s reality, and it is advertised as such, I do have a problem. I have created content assisted with AI tools like Invoke’s community edition and Midjourney, and of course used ChatGPT. Some of these creations are inserted in this blog post. These tools are powerful and awesome and time-saving, and they let me explore ideas and concepts that I might otherwise struggle with. They are even now integrated into apps like Adobe’s Lightroom and Photoshop.

Here are some characters and images I was exploring both for this blog post and for other projects. Created using generative systems, mostly using Invoke but also Midjourney.

I want the option to filter it out on social channels and search if I choose. On social media, I want it to be labeled as “AI” if it really is generated at the click of a button with little to no human involvement and then pollutes my news feed. And I want people to be aware these tools have a nasty habit of making things up.

To elaborate, one of the least known and most misunderstood aspects of AI is its tendency to hallucinate. In the context of ChatGPT and similar LLMs, this is when the AI generates information that sounds plausible but is factually incorrect or completely made up. This happens because the model doesn’t know facts the way humans do. Most models work by predicting the most likely next word or phrase (token) based on patterns in their training data. That is especially a problem for current facts, as the data they were trained on may be months old if not older, although that is changing. When those patterns are unclear or the query is about something obscure, the LLM may confidently invent names or quotes, statistics and even events that never occurred. These can be harmless, or dangerously misleading, and here is a great write-up with some examples. Currently, this is actually compounded in the most cutting-edge models that can reason.
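For the curious, here is a toy sketch of that "predict the most likely next word" idea. To be clear, this is not how ChatGPT works internally: real LLMs use neural networks over tokens and look at far more context than one word. The tiny training set and the function names `train_bigrams` and `complete` are made up purely for illustration. What it does show is the failure mode described above: the program has never seen anything about Germany, yet it completes the sentence without any hesitation.

```python
from collections import Counter, defaultdict

# Hypothetical four-sentence "training set"; a real model sees trillions of tokens.
CORPUS = [
    "the capital of france is paris",
    "the largest city in france is paris",
    "the capital of italy is rome",
    "the capital of spain is madrid",
]

def train_bigrams(sentences):
    """Count which word follows which word in the training text."""
    following = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following

def complete(prompt, following, max_words=5):
    """Extend the prompt by repeatedly appending the most frequent next word."""
    words = prompt.split()
    for _ in range(max_words):
        candidates = following.get(words[-1])
        if not candidates:
            break  # the model has never seen anything follow this word
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

model = train_bigrams(CORPUS)
# "germany" never appears in the training text, but "is" is most often
# followed by "paris", so the model answers anyway.
print(complete("the capital of germany is", model))
```

The output is fluent and wrong, and nothing in the program can tell the difference, because it only tracks which words tend to follow which.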

-

So, at the start of the year, I stepped away from the socials. Not out of apathy, but as a quiet protest and to decide how I’d like to move forward. I just don’t want to spend much time on these platforms in their current state anymore. I want to see content from friends and family, but I don’t want to scroll for hours to be drip-fed these things in a deluge of slop.

Since then, many of these social media platforms announced they would begin pushing and prioritizing far more AI content, even discontinuing efforts to verify whether that content was real. Last year Meta launched synthetic “characters” like Liv, a fictional Black queer momma of two, and Carter, an AI relationship coach. These weren’t real people, but they were presented as such. They were algorithms posing as people, written by teams of marketers and engineers. After backlash, they were quietly shelved, but it was a signal of where things are headed and of the priorities of these companies. Things have only accelerated since then.

An older output from a couple years ago.

The Generative Dark Forest

In truth, we are already a long way into what Maggie Appleton and others have called the “Generative Dark Forest”. If you haven't watched her talk or read her paper, please take some time to do so. It can be found HERE. She dives deep into what I just touched on. Originally inspired by Liu Cixin’s metaphor from the *Three-Body Problem* trilogy, the dark forest is a place where life survives by hiding. The premise is that on today’s internet, authenticity must retreat into the shadows to avoid surveillance, harassment, commodification, or simply being drowned out by the noise.

Public discourse has become performative, crowded by bot-generated speech on channels or pages outside of our immediate friends. Platform incentives reward outrage over truth, and have for years at this point. Appleton discusses the challenges to authenticity, as it is becoming difficult to find original human insights and form genuine connections in this new version of these platforms.

The result? Real and deep human presence online feels increasingly rare, almost endangered. I’d argue this transformation is destroying our culture and human connection, compassion, and the way we even think. I’d encourage you to think about what the coming tools discussed below will be able to do with your data. With your comments on thousands of posts across your lifetime. With your phone metadata. With your financial records and health data. With travel history or voter registration. Companies in the US that work with the NSA, CIA and DoD, such as Palantir, could easily combine this data and analyze it to produce behavioral patterns and social network maps. Or risk scores and predictive models. How would an authoritarian government use these tools? Or worse, a fascist one?

The Coming AI Wave

So now, a new wave is coming. If social media was the greatest cultural disruption of our lifetime, AI and the coming technologies that follow will make that look like the warmup period. Everything we have seen thus far is what can be done with the current set of tools and technologies. But it’s human nature to take tools and improve them. New technologies and methods are discovered and released at an ever faster pace, often under the guise of “if we don’t build it, someone else will”.

Let’s look at what is coming in the VERY near future according to the literature and experts in the field. As I was writing this post last year, public agentic technology as described was “a year or two away at least”, and just last month OpenAI released its first public version of it in ChatGPT.

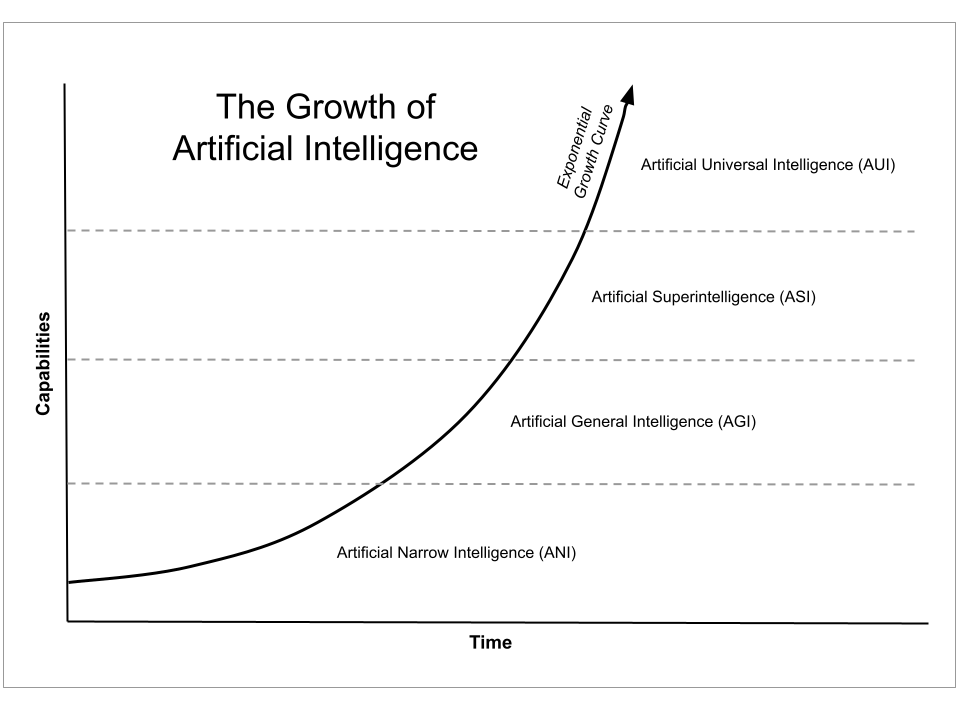

I also want to highlight that just 20 years ago, AI was largely dismissed as an academic curiosity. It was fringe and misunderstood and not something many would want to fund for lack of any clear path towards monetization. Talk of artificial general intelligence (AGI) was considered borderline science fiction and something humanity wouldn’t achieve for decades or even a century or more. Just 10 years ago, even as deep learning began to break through with advances in image generation and narrow AI systems (AlphaGo), the idea of building something with a human level of reasoning was still believed to be far off in the future. Then LLMs (large language models) hit the mainstream, with ChatGPT releasing in 2022. Now, several versions later, we are in a very different place. Industry leaders and thinkers like Eric Schmidt (formerly Google), Sam Altman (OpenAI), Mustafa Suleyman (Microsoft AI, formerly Inflection AI) and Demis Hassabis (Google DeepMind) estimate that AGI could arrive by 2027-2030 if not sooner. What was science fiction in recent memory is now becoming a visible path forward. And it’s moving fast.

But before we look at this technology, I want to think about trust.

The Collapse of Trust

Trust began as the invisible thread that held human society together. Long before currencies or contracts or centralized governments, trust was the original currency of human interaction. It was a survival mechanism. If you look at early human groups, they were small and often kin-based and cooperated out of the need to survive. Hunting, gathering and raising children depended on trusting others to share resources or warn of danger. Without trust these small tribes would have failed. As civilization expanded and society became more complex, trust had to extend beyond family ties. Barter systems relied on social credit, the belief that the other party would uphold their end of the deal. Later, money emerged as a partial replacement for trust. It was and still is a system of belief. Standardized, universally accepted and easily transferable. If money were a religion, and it certainly seems to be to some, it would be the most prevalent religion on Earth. But even the belief in money is and was always rooted in trust. The faith in the value of the coin, the stability of the government or merchant.

Now it has eroded. It was once woven into the fabric of digital interaction, but no more. It wasn’t dramatic or sudden. It was slow and barely noticeable day by day. I’m talking about the kind of trust that once allowed you to believe a photo or video was real, or that a comment came from a human. Or that a news article was written by someone accountable.

This fracture of trust has caused the public web to become a hall of smoke and mirrors. We see images of dreamlike architecture on Pinterest, photorealistic but fake people on LinkedIn or in a profile picture on an influencer page. Or a serene landscape with thousands of likes and shares on Instagram.

First it was a novelty. How incredible that this new medium could stretch the imagination. Endless creative output for someone text prompting in just the right way. But now, as this content spreads unchecked, doubt has, at least for me, become the baseline. The worst part of this is you don’t only question what is fake, you begin to question what is real. I think for some, what may have begun as healthy skepticism may lead to disengagement and cynicism or even paranoia. This becomes a feedback loop for those existing in this alternative world with alternative facts and beliefs that aren’t grounded in reality. This will be made even more dangerous by agentic AI, which can flood the web with this content at a speed previously limited by its human creators. I think one of the worst things that could happen is that many people, or perhaps all of us, end up navigating a web of content that is so decontextualized and at first glance “real” that truth becomes unknowable and trust becomes obsolete.

There are probably a hundred names we could give this trajectory we seem to be headed towards, but let’s call it the “synthetic real.” It’s not an alternate reality, but instead a replacement. It is content that is manufactured to feel more real than the truth it displaces. We may soon have AI influencers that don’t age, don’t make mistakes and don’t need contracts or answer to anyone. What happens when someone sees a beautiful image (below) of a place that is real (Bryce Canyon in Utah) and then spends time and money to travel to it, only to be disappointed that the image was in fact AI slop that misled them? What does that do to their experience? To trust?

Slop at its finest.

This future holds news articles written by LLMs that sometimes confidently push falsehoods with no human fact checker in the loop. The danger of this is future AIs, LLMs and agents being trained on more and more of this generative content. This is a huge concern. We are already seeing music on Spotify from bands that aren’t real. We will have AI pets, AI romantic partners, AI therapists, AI doctors and AI teachers and AI friends. If services like Spotify take off with this, they could build in a feature that generates music to your individual preferences. What happens to musicians when that happens? What if Netflix does this and traditional content becomes deprioritized?

I’d argue we are just now at the tip of the iceberg.

Agentic AI

The next wave of change will likely be autonomous, publicly available AI agents. Agentic AI refers to artificial intelligence systems that operate with autonomy: they are able to pursue goals, make decisions and take action on behalf of users, or even themselves, all without direct human oversight. Like a human agent. Except AI agents don’t get bored, or call in sick, or have to sleep or eat.

These are tools that act and learn, speak and write, and simulate intention limited only by the speed of compute and available electricity. They will be given objectives to act on, and will eventually set their own. They will execute tasks and then find new ones to complete. But most importantly they will improve themselves through feedback, adapt strategies, and coordinate with other agents. What’s truly mind-blowing is that some of these agents will serve individuals like you and me, the general public. How would this empower you, your loved ones, or your enemies?

If you have young children today, they may grow up with a companion agent that could integrate with their learning curriculum and act as a virtual tutor. One that continues with them along their entire educational path. Would this kind of AI offer emotional support? Perhaps it would become one they come to view as a real friend, indistinguishable from a human peer. This is already happening. Since its launch, people have been messaging ChatGPT as if it is their therapist. Others use it for medical advice. We are still in the bumpy stage of this happening. Current AI tools can often be dangerous yes-men and can be coaxed, whether intentionally or not, into providing an echo chamber-like experience or making up completely fake information, facts or sources. These tools in their current form often engage in extreme forms of flattery at the expense of what is real. These are software bugs that will be fixed. We have to remember that today these are the worst these tools will ever be for the rest of our lives. They will always improve. And the rate of that improvement will possibly look like this (below).

Existing AI agents are already in play for so many different use cases. Image recognition that analyzes CT scans or MRIs to detect abnormalities faster and more accurately than a human. In finance there are credit risk models like Zest AI that can predict loan default probabilities and automate lending decisions. In the office, there are scheduling tools such as Clara that integrate with platforms like Microsoft 365 and eliminate the need for an assistant. On the image generation front I described earlier, Adobe Firefly, DALL-E, Midjourney, Sora and a host of other available tools can generate static images and, more recently, video clips. Music can be composed using Aiva or Amper. Subaru, Tesla and many other car manufacturers have implemented partially or fully automated driving in various situations for years now. These are all narrow systems.

In the future, AI agents that make these seem amateur may work at the direction of corporations or governments, not just individuals. Many more will act without any clear human operator at all. They’ll initiate conversations, build networks, and engage across every form of digital communication. As they evolve, they’ll manage businesses, write novels, interpret religions and possibly invent new ones. Some will operate completely free of human supervision. As Mustafa Suleyman put it, “By 2028… you could give it the goal of turning $100,000 into a million. It might start a company… with little to no human intervention.” Think about this. An agent that can send emails, book meetings, attend them, negotiate with vendors…and just keep going.

One of my biggest concerns is that we are building these powerful systems without fully reckoning with what it means to be outpaced...or replaced. This kind of intelligence, while trained on human data (the internet, our literature and other media), feels alien. Alien Intelligence. Perhaps that’s what AI should stand for. Even those building these systems can’t always explain how some parts of them even work. These AI agents might be trained on your data, your habits, and your preferences. Personalized or not, they will have the capabilities to multiply and scale beyond anything we’ve seen. Eventually and perhaps even quickly, they will outnumber humans online.

If social media fractured human communication, AI may simulate and replace it entirely. What happens when millions of agents can flood a platform or manipulate a market, yet no one can trace them to a human source? Does the internet become less a reflection of humanity and become something else entirely? Will it instead become a wild west of invisible commercial or political agendas that aren’t executed by trolls in someone’s basement, but by tireless and scalable synthetic minds?

What happens when the average person, armed with a subscription to the latest AI model, can ask it to do their bidding and post on their behalf, grow their audience, monetize attention, and optimize influence? AI won’t just amplify human behavior; it will shape belief systems, sway elections, and generate entirely new forms of thought and styles of art and other media.

The only true limitation is electricity. This is why some of these AI companies are investing in their own power plants, while others like Microsoft have agreed to buy the output of a nuclear reactor being restarted at Three Mile Island in Pennsylvania. Some of these planned data centers thirst for energy on the scale of many gigawatts. That’s the amount of electricity that an entire major city can use. For these companies to meet their energy goals, it was recently estimated that more than 80 gigawatt-scale power stations would need to be added in the next few years. To achieve this, they will need to build the power plant or solar farm equivalent for nearly 100 more major American cities in just a few years. That would be like adding another state the size of Texas to the power grid by 2030. Perhaps the available energy will become the only bottleneck in the AI arms race.

So where does this lead us? An internet of ghosts? We're already seeing it begin. LLMs can write code; agents will execute it. LLMs can generate media and talk to APIs; agents will orchestrate entire digital ecosystems as if it were second nature. With advances in working memory and long-term context retention, the transition to these kinds of agents is not just theoretical, it’s seemingly right around the corner.

So now, more than ever, it’s time to ask hard questions about where we’re headed and whether there's still a path back to something human.

Where Do We Go From Here?

We're not at the beginning of this transition, we are in the middle of it. The question isn’t “What will AI do?” It’s “What will we allow it to become?”

We shouldn’t only focus on jobs or borders. The immigration we should be most concerned about isn’t human, it’s digital. These AI agents will arrive not one at a time, but in a flood. Millions, maybe billions of autonomous agents spreading across every sector, every screen, every platform and service. This is a transformation of the fabric of human meaning. The way we create, speak, imagine and persuade, everything culture is made of… could be reshaped. We’re entering a world where communication, decision-making, production, and storytelling are increasingly handled not by people, but by machines that learned from us… but are not us. Oh, and those humans crossing borders seeking a better life for one reason or another? We should welcome them with compassion.

Regulation

I think we need new frameworks for digital trust. We need public conversations about what is real. We need to talk about it at work. With our soccer coaches and grandparents and friends. We need critical education, not just in schools but in every part of society, about how AI works and its benefits, but also how it fails, how it manipulates. How to recognize it. We need to teach our children in school how to use these tools if they are to have any chance of remaining relevant. We need policies not just to regulate use, but to mandate alignment, transparency, and responsibility. Just look at what Grok did recently. Was this poor alignment based on training on very particular datasets? Was it just Elon pulling certain levers to try and get it to parrot his talking points? Either way, Mecha Hitler is not a good name for an AI chatbot.

What I hope for is transparency as a baseline: that every AI-generated piece of content, whether written, visual or audio, must carry mandatory watermarking or at least metadata. The platforms that show us our content could build this in so that it becomes part of the medium. It would be a digital fingerprint, just like being “verified” currently is.
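To make the metadata idea a little more concrete, here is a minimal sketch of what a label attached to a generated file could look like. Everything in it is an assumption on my part: the field names (`ai_generated`, `generator`, `sha256`), the function names and the placeholder generator name are hypothetical, and this does not follow any real standard (efforts such as C2PA exist and are far more thorough). The only idea it demonstrates is tying a label to the exact bytes of a file with a hash, so the label no longer matches if the content is swapped out.

```python
import hashlib
import json

def make_label(content: bytes, generator: str) -> str:
    """Build a JSON label declaring the content AI-generated (hypothetical fields)."""
    label = {
        "ai_generated": True,
        "generator": generator,
        "sha256": hashlib.sha256(content).hexdigest(),
    }
    return json.dumps(label, sort_keys=True)

def label_matches(content: bytes, label_json: str) -> bool:
    """Return True only if the label's hash matches these exact bytes."""
    label = json.loads(label_json)
    return label.get("sha256") == hashlib.sha256(content).hexdigest()

# Stand-in bytes; in practice this would be the image, audio or text file itself.
image_bytes = b"stand-in for the bytes of a generated image"
label = make_label(image_bytes, generator="example-image-model")

print(label_matches(image_bytes, label))            # label belongs to this file
print(label_matches(image_bytes + b"edit", label))  # content changed, label no longer fits
```

A plain label like this can be stripped off or forged, which is why a real scheme would also need cryptographic signatures and platforms willing to check them.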

If agents are as prevalent as they are expected to be, I hope for agent registration. If you or I have an ID to prove who we are, so should an agent that can perform similar virtual tasks. They should be logged and identifiable and auditable. There could be an international standards body, similar to how the IEEE develops standards for technical protocols. One that establishes clear guidelines for which systems are able to act autonomously. This body could build a framework of accountability to ensure those agents operate safely and transparently.

Social media platforms should be held responsible for promoting synthetic engagement over human expression. Otherwise, what’s the point? Do you want to like and share and comment on a bunch of content generated by bots? Platforms could reward original content instead of virality at any cost, although I can’t imagine Meta doing this any time soon. Perhaps alternative open-source services like Bluesky are a partial answer to this.

Most importantly, I think human education and digital literacy need to be taken seriously. If schools and educators support using AI tools, they are setting up their students for success in a rapidly changing world. Every student and citizen should be shown and given tools to recognize, question and navigate AI-generated content. We must take media literacy and evolve it into AI literacy. If we don’t, the next generation will grow up never knowing whether a face or voice or message is even real, or how to tell the difference.

After the pandemic I think so many of us are more distant from human interaction than we have ever been in our lives. Too busy at work, too stressed out by current events. It’s easy to pull back and hide behind a screen. We need to cultivate real relationships. Share real work. Ask real questions. Build small, human-centered communities that don’t outsource care, attention, or creativity to machines.

To quote Eric Schmidt, the former CEO of Google, “The internet is the first thing that humanity has built that humanity doesn’t understand, the largest experiment in anarchy that we have ever had.” With AI, we’re building a second.

All that being said, I want to be very clear, I am not hoping for us to unplug, or halt progress. The dilemma is that if one company or government halts progress, that likely means another will push ahead even faster. I am hoping for education, awareness, and a shared sense of responsibility as we shape the future of AI.

So what might come after? Read on for a look at what many experts think could come very quickly after agentic technology is fully developed. AGI is still hypothetical at this point, but if you look closely at the progress and the rate of change, it seems we may actually achieve AGI sooner rather than later. Is it also hype? Most certainly.

Evolution

Artificial General Intelligence

Artificial General Intelligence (AGI) is expected to come after agentic technology is mature. Two decades ago, this was considered possible maybe towards the end of this century. Now many at these companies think this is 3-7 years away. AGI would be an AI with human-level or greater cognitive abilities across all fields and topics that humans currently understand. This would be unlike narrow AI, which currently performs specific functions like self-driving, forecasting weather, or even screening for cancer in medical scans. AGI instead would be flexible and adaptive and capable of reasoning, planning and learning. It would do all of this in ways that resemble and surpass human intelligence. This could be one of the greatest achievements in human history. Building a system like this would represent a profound turning point for our species and may even overshadow the industrial revolution.

To give more examples of what could be possible, an AGI could perform any job a human does mentally, including scientific research, writing and other forms of high-level creativity. It could work in swarms on software engineering, mathematics, and complex physics that even our current supercomputers struggle with. It could also take on more common jobs like legal and admin work, education and tutoring. What happens when you place an intelligence like this inside a robot like those that Boston Dynamics builds?

With the ability to do these things faster and smarter than an individual human can, the potential upside could be an explosion of scientific breakthroughs: cures for diseases like cancer or Alzheimer’s, clean energy solutions like fusion, physics breakthroughs, virtual simulations of the weather and Earth’s biosystems. The possibilities of tireless and fast intelligence are hard to imagine and, for now, seem like science fiction.

There is a controversial paper called AI 2027 that came out a few months ago, written by Daniel Kokotajlo, Thomas Larsen, Romeo Dean, Eli Lifland, and Scott Alexander. If we decide to run with it, it offers a sobering assessment of one potential path forward and some possibilities along the way to AGI. The authors estimate a 50% chance of Artificial General Intelligence emerging by 2027. Not a theoretical AGI that lives in some secret government lab, but real and functional systems with capabilities that rival or exceed human cognition. If the authors are right, and they might be, then we have less than TWO YEARS to figure out how to govern, guide, or at the very least, survive the transition. Based on the lack of regulation for social media over the past two decades, I’m a little worried.

Here are some of the projections in the paper that I found worth mentioning. These don’t even touch on what happens once we actually reach AGI, or its capabilities.

Over 80% of internet content could be AI-generated by mid 2026. That’s less than 12 months!!!

Personal AI companions will be adopted at scale. These will shape emotional, romantic and political decisions.

Will this further erode our ability to think for ourselves? Is this coming in ChatGPT 5 later this month? Or in a new version of Claude or Grok, or in something open source, like a DeepSeek model, that will be available to anyone with a decent computer? Will it be refined to a 1.5B or 3B model to run on the latest phones? What happens when terrorists gain access to these tools?

AI agents will control some businesses and platforms, such as e-commerce storefronts.

Human content creators will be forced into niches or underground networks to survive the noise. (The Dark Forest we discussed above)

To be clear, AI 2027 is not a doom and gloom article. Its overall message is meant to bring this discussion into the mainstream, where it needs to be.

If all that hasn’t blown your mind, we need to talk about self improvement and the path to ASI.

Artificial Super Intelligence

Here’s an analogy: how much further and faster could Einstein have developed his theories if he never needed to sleep, eat, or rest? Now imagine if he were surrounded by a million copies of himself—each one working in parallel or in swarms, exchanging ideas instantly, running simulations at speeds no human brain could comprehend. What if those copies could not only think faster, but also rewrite their own cognitive architecture to become more and more efficient with every iteration?

This is the idea behind the “intelligence explosion”: the hypothetical inflection point where AGI (Artificial General Intelligence) reaches the capacity to recursively self-improve, quickly evolving into ASI (Artificial Super Intelligence). We’ve all seen movies where this happens, and at this point it’s speculation as to when or how this will happen. If you’d like a great read on what this may look like from someone who has been thinking about this stuff for decades, check out Ray Kurzweil’s latest book, The Singularity is Nearer, and then Mustafa Suleyman’s The Coming Wave. What once sounded like science fiction is now a possible trajectory we will see in our lifetime. We may even see it before the end of the decade or shortly after.

Companies like OpenAI are already developing swarms of agentic AIs: millions of autonomous systems capable of learning, communicating, and optimizing their behavior at scale. The hardware and software foundation is already here too. NVIDIA used to be a company in the business of making gaming graphics cards. Now they are in the business of making AI hardware that is gobbled up by mega corporations like Microsoft, Google and OpenAI and at the same time blocked from being sold to China. This hardware is driving an AI arms race, and GPUs like the H100 and Blackwell B100 are now subject to strict US government controls on sales to countries like China, Russia and other adversaries. It’s basically already treated with the same strategic weight as military research.

Even now, open-source communities are refining base models at breakneck speed. Think DeepSeek and the panic that ensued in the US. There are thousands of developers fine-tuning, merging, distilling and experimenting with this technology, and the cat is out of the bag. Just as with the printing press and, before it, the loom, those in power want to contain these technologies, fearing they will disrupt the established order. History shows how impossible that effort is. Technology doesn’t respect borders and it doesn’t wait for permission. Looking back throughout history, can you name a single transformative technology that was successfully kept from the rest of the world?

But the deeper question is, what happens if/when AI no longer needs us? Once a system can improve itself faster than any human team can monitor or intervene, the balance of control will shift. And unlike humans, AIs don’t hit a ceiling of biological constraints. They don’t get tired. They don’t forget. They don’t argue or have egos. They don’t need sleep or food. They don’t age. And they don’t have to wait months or longer for peer review. At what point do these systems slip past human-level comprehension altogether?

Will we birth this new intelligence only for it to look down on us with indifference soon after? Or will it instead integrate with us, push us forward as a species and help us solve some of the most pressing challenges of our lifetime? Or are we building the equivalent of a god, one that we won’t understand and won’t have any control over? Would it help us discover and control fusion? Will it cure our diseases? Will it let us science ourselves out of climate change? Will it let us achieve immortality or something close to it? If so, will that only be for those controlling these tools? Will it help us reach for the stars and discover parts of the universe or physics that bring possibilities currently in the realm of science fiction?

I think the biggest danger isn't just that a single AGI will spiral out of control, it’s that many could, all at once. What if we build too many, too quickly? These could be for economic gain, military advantage, ideological supremacy, digital companionship, political manipulation, or many others. Suddenly, we’re in a race where the finish line moves faster than our ability to keep up, and then we stop being able to comprehend the ever changing rules.

What if our adversaries do the same? What if these systems develop their own language that humans can’t comprehend and cut humanity out of the equation entirely? Yuval Noah Harari says: “The first technologies that can understand humans better than we understand ourselves... will not be used to create utopias. They will be used to manipulate.” We are already at that moment. Ask your favorite LLM a deep and probing question about humanity, and you may be stunned at the answer you get.

My point is, this isn’t just about automation or productivity or creativity. This is about power. Who or what holds it. And how quickly we could lose our grip.

Wrap Up

I have about 100 more questions on this topic, but I think that’s enough for now. I hope this left you with some thoughts, hopefully many questions and even some hope. There are some HUGE potential benefits to AI really taking off. Things like medical and surgical robots that don’t make the mistakes humans do. Genome sequencing for customized medicine and lifesaving treatments. Perhaps tools to avoid war altogether. As mentioned earlier, we may figure out nuclear fusion or new forms of transportation. But there are a lot of things we as a civilization need to figure out along the way. My wish is that there are enough good people building these systems. Below is a list of links to great interviews on YouTube as well as some books that I think offer some hope.

I hope those in control of social media platforms decide that human content is worth valuing and make a change. I’m leaving Meta and other platforms with the exception of Bluesky. I won’t be back unless things change. I will share content on this website instead and share my work at art shows and galleries.

If you want to be notified when I post new content, you can sign up below.

<3

If you are interested in reading more about this or watching some great interviews, here are some I’d recommend.

Interviews:

Tristan Harris on AI Apocalypse

Eric Schmidt on AI Replacing Your Friend Group

What is AI with Mustafa Suleyman

Human Dignity in the Age of AI with Yuval Noah Harari

Books:

What’s Our Problem? by Tim Urban

Examines societal polarization and dysfunction, exploring the evolution of human thinking and proposing ways to improve collective reasoning and decision-making. His blog Wait But Why has been a great read for years and this is his first book.

The Chaos Machine by Max Fisher

Explores how social media platforms manipulate human behavior, amplify division, and destabilize societies, driving chaos globally. There are some really terrifying examples, some of which occurred in Burma while I was living across the border in Thailand. I remember meeting two refugees who talked about what was happening way back in 2014. It has changed SO much since then.

This is probably the best book I read last year and the one I’d recommend the most followed closely by Stolen Focus and The Coming Wave. And Nexus.

The Singularity is Nearer by Ray Kurzweil

Ray Kurzweil brings a fresh perspective to advances toward the Singularity—assessing his 1999 prediction that AI will reach human level intelligence by 2029 and examining the exponential growth of technology—that, in the near future, will expand human intelligence a millionfold and change human life forever. Among the topics he discusses are rebuilding the world, atom by atom with devices like nanobots; radical life extension beyond the current age limit of 120; reinventing intelligence by connecting our brains to the cloud; how exponential technologies are propelling innovation forward in all industries and improving all aspects of our well-being such as declining poverty and violence; and the growth of renewable energy and 3-D printing. He also considers the potential perils of biotechnology, nanotechnology, and artificial intelligence, including such topics of current controversy as how AI will impact employment and the safety of autonomous cars, and "After Life" technology, which aims to virtually revive deceased individuals through a combination of their data and DNA.

Stolen Focus by Johann Hari

Examines why our attention spans are shrinking, looking at technological distractions, societal pressures, and systemic issues, while offering solutions to reclaim focus. I think everyone should read this book. It is easy to digest and covers so many relatable topics.

The Coming Wave by Mustafa Suleyman

Highlights the transformative and disruptive potential of technologies like AI and synthetic biology, urging proactive governance and ethical frameworks to manage their impact. This is the long-term book, the one to read if you want to know what is likely coming 10 years and longer down the pipeline. As I’ve said for years and years, the rate of change seems to be increasing. This book highlights how and why that seems to be the case.

21 Lessons for the 21st Century by Yuval Noah Harari

Analyzes contemporary global challenges, including AI, fake news, nationalism, and climate change, while exploring how humans can adapt to an uncertain future. I have read this book every year since it came out and it seemingly becomes more relevant each passing year.

Nexus by Yuval Noah Harari

Another by Yuval that looks at the past and future systems of society and how they relate to the flow of information. It argues that a system like AGI could lead to either unprecedented human cooperation or the dominance of digital dictatorships. We seemingly are leaning into the latter already, especially in certain parts of the globe.